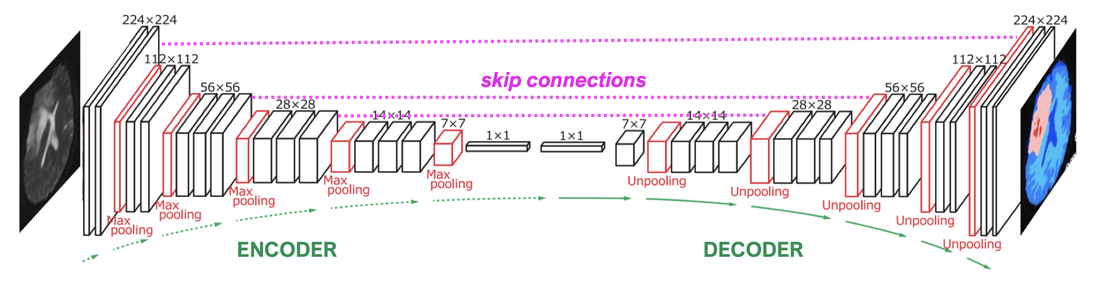

Encoder-decoder networks are a specific form of CNN widely used for image segmentation, co-registration, and artifact reduction. They typically have a "U-configuration", with an initial contracting path (the encoder) followed by an expanding path (the decoder). Full-resolution images entering the encoder are progressively reduced in size by convolution and pooling operations, compressing them into lower-dimensional feature maps. The decoder then re-expands these maps back to full size, retaining the most meaningful features. The spatial resolution lost during compression can be partly recovered by inserting skip connections between the two sides, which pass fine details directly from encoder to decoder. The U-Net (pictured) and its variants are the most widely used encoder-decoder networks for medical imaging.
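The contracting path, expanding path, and skip connections can be illustrated with a toy example. The sketch below is not a real U-Net: it assumes 2x2 average pooling as a stand-in for the learned encoder stages, nearest-neighbour upsampling for the decoder stages, and simple averaging in place of the learned convolutions that would normally merge skip and decoder features. All function names are hypothetical.

```python
import numpy as np

def downsample(x):
    """2x2 average pooling: halves height and width (stand-in for an encoder stage)."""
    h, w = x.shape
    return x.reshape(h // 2, 2, w // 2, 2).mean(axis=(1, 3))

def upsample(x):
    """Nearest-neighbour upsampling: doubles height and width (stand-in for a decoder stage)."""
    return x.repeat(2, axis=0).repeat(2, axis=1)

def tiny_unet(image):
    """Two-level 'U': contract twice, expand twice, merging skip connections on the way up."""
    e1 = image                    # full resolution (e.g. 8x8)
    e2 = downsample(e1)           # half resolution (4x4)
    bottleneck = downsample(e2)   # quarter resolution (2x2)
    # Assumption: averaging replaces the learned convolution that would
    # normally combine the upsampled features with the skip connection.
    d2 = np.stack([upsample(bottleneck), e2]).mean(axis=0)
    d1 = np.stack([upsample(d2), e1]).mean(axis=0)
    return d1

image = np.arange(64, dtype=float).reshape(8, 8)
out = tiny_unet(image)

# Same path without skip connections, for comparison.
no_skip = upsample(upsample(downsample(downsample(image))))
error_with_skip = np.abs(out - image).mean()
error_without_skip = np.abs(no_skip - image).mean()
```

In this toy setting the output with skip connections stays closer to the input than the output without them, which is the role the skip connections play in restoring fine detail.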

Nodes in an RNN can feed recent outputs back onto themselves or others

RNNs differ from standard "feed forward" neural networks in that they contain data feedback loops. This feedback serves as a type of "memory" allowing them to use recent outputs as updated inputs for subsequent calculations. RNNs are useful in the analysis of sequentially acquired data, including time series and speech processing. For example, the location of the cardiac septum on cine MRI depends on its immediately prior position; the next word in a radiology report depends on the previous word.
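The feedback loop can be written as a single recurrence in which each new hidden state depends on the current input and on the previous hidden state. The following is a minimal sketch of a plain (Elman-style) RNN cell, assuming a tanh activation and randomly initialised weights; the names `rnn_forward`, `W_x`, `W_h`, and `b` are illustrative and do not come from the text.

```python
import numpy as np

def rnn_forward(inputs, W_x, W_h, b):
    """Run a simple RNN over a sequence and return the hidden state at every step."""
    h = np.zeros(W_h.shape[0])          # initial "memory" is empty
    states = []
    for x_t in inputs:
        # The previous state h is fed back in alongside the new input x_t.
        h = np.tanh(W_x @ x_t + W_h @ h + b)
        states.append(h)
    return np.stack(states)

rng = np.random.default_rng(0)
W_x = 0.5 * rng.normal(size=(4, 3))     # input-to-hidden weights
W_h = 0.5 * rng.normal(size=(4, 4))     # hidden-to-hidden (feedback) weights
b = np.zeros(4)

sequence = rng.normal(size=(5, 3))      # 5 time steps, 3 features each
states = rnn_forward(sequence, W_x, W_h, b)

# Changing only the first time step still alters the final state,
# because information is carried forward through the feedback loop.
changed = sequence.copy()
changed[0] += 1.0
changed_states = rnn_forward(changed, W_x, W_h, b)
```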

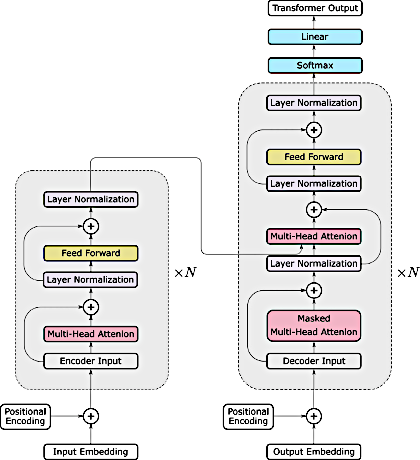

Transformer Neural Networks (TNNs)

TNNs, also known simply as Transformers, were developed in 2017 by the Google Brain research group to improve natural language processing. The wildly popular application, ChatGPT, is based on a transformer architecture. (The letters GPT stand for "Generative Pre-trained Transformer".) Many transformer variations exist, including hybrid forms with CNNs or GANs.

Typical Transformer architecture with encoder on left and decoder on right. (From He et al., under CC-BY)

Two other characteristic features of Transformer Networks are the use of positional encoding and self-attention. Positional encoding refers to the indexing of words or image segments by original location as they pass through the network. Self-attention refers to the ability of the network to identify the most important features in a data set and their relationships to other data.
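Both ideas can be sketched in a few lines. The example below assumes the sinusoidal positional encoding and scaled dot-product attention described by Vaswani et al.; other positional schemes (for example, learned embeddings) are also common. The weight matrices are random placeholders rather than trained values, and the function names are hypothetical.

```python
import numpy as np

def sinusoidal_positions(n_tokens, d_model):
    """Sinusoidal positional encoding (d_model is assumed to be even)."""
    pos = np.arange(n_tokens)[:, None]
    i = np.arange(d_model // 2)[None, :]
    angles = pos / (10000 ** (2 * i / d_model))
    pe = np.zeros((n_tokens, d_model))
    pe[:, 0::2] = np.sin(angles)   # even columns
    pe[:, 1::2] = np.cos(angles)   # odd columns
    return pe

def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)   # subtract max for numerical stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def self_attention(X, W_q, W_k, W_v):
    """Single-head scaled dot-product self-attention over a token sequence X."""
    Q, K, V = X @ W_q, X @ W_k, X @ W_v
    scores = Q @ K.T / np.sqrt(K.shape[-1])   # pairwise relevance of tokens
    weights = softmax(scores, axis=-1)        # each row sums to 1
    return weights @ V, weights

rng = np.random.default_rng(0)
n_tokens, d_model = 6, 8
tokens = rng.normal(size=(n_tokens, d_model))           # placeholder embeddings
X = tokens + sinusoidal_positions(n_tokens, d_model)    # inject position information
W_q, W_k, W_v = (rng.normal(size=(d_model, d_model)) for _ in range(3))
output, weights = self_attention(X, W_q, W_k, W_v)
```

Row `i` of `weights` indicates how strongly token `i` attends to every other token when its output is computed.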

Advanced Discussion

More information about Transformer Networks in Imaging

While transformer networks were initially introduced for natural language processing, they have also been adapted for image processing tasks. These adaptations are known as vision transformers (ViTs).

The key idea behind ViT is to break an image into fixed-size patches and treat them as a sequence of input tokens, similar to the words in a sentence. In the original ViT each patch is simply flattened into a vector of pixel values; in hybrid variants the patches are instead taken from the feature maps of a convolutional neural network (CNN). These vectors are then linearly projected to the embedding dimension used by the transformer, and a positional embedding is added to each so that the patch's original location is retained. The resulting patch embeddings serve as the input tokens for the transformer network.
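The patch step can be sketched as follows. This example assumes the simplest variant, in which each patch is flattened directly into a pixel vector (a CNN feature extractor could be substituted at this point), a single-channel image, and random placeholder values for the projection matrix and positional embeddings, which would be learned in practice. The names `image_to_patches`, `W_embed`, and `pos_embed` are illustrative.

```python
import numpy as np

def image_to_patches(image, patch):
    """Split a 2D image into non-overlapping patch x patch tiles, one flattened tile per row."""
    h, w = image.shape
    rows, cols = h // patch, w // patch
    tiles = image.reshape(rows, patch, cols, patch).transpose(0, 2, 1, 3)
    return tiles.reshape(rows * cols, patch * patch)

rng = np.random.default_rng(0)
image = rng.normal(size=(32, 32))        # placeholder single-channel image
patch, d_model = 8, 16

patches = image_to_patches(image, patch)                   # (16 patches, 64 pixels each)
W_embed = 0.02 * rng.normal(size=(patch * patch, d_model)) # linear projection (learned in practice)
pos_embed = 0.02 * rng.normal(size=(patches.shape[0], d_model))  # one position vector per patch

tokens = patches @ W_embed + pos_embed   # (16 tokens, 16 dimensions)
```

Each row of `tokens` now plays the same role as a word embedding in a language transformer.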

The transformer network is then applied to the sequence of patch embeddings, similar to the way it is applied to the sequence of word embeddings in natural language processing. The self-attention mechanism is used to model the dependencies between patches, allowing the network to capture global context information from the entire image. A multi-head self-attention mechanism is also used to allow the network to attend to different parts of the image.
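Multi-head self-attention over a sequence of patch embeddings can be sketched as below. The example assumes the standard scaled dot-product formulation, an embedding dimension divisible by the number of heads, and random placeholder weights; the patch tokens themselves are random stand-ins, and all names are hypothetical.

```python
import numpy as np

def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)   # subtract max for numerical stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def multi_head_self_attention(X, W_q, W_k, W_v, W_o, n_heads):
    """Each head attends over all patches using its own slice of the embedding."""
    n, d = X.shape
    d_head = d // n_heads

    def split(M):
        # (n, d) -> (n_heads, n, d_head)
        return M.reshape(n, n_heads, d_head).transpose(1, 0, 2)

    Q, K, V = split(X @ W_q), split(X @ W_k), split(X @ W_v)
    scores = Q @ K.transpose(0, 2, 1) / np.sqrt(d_head)   # (n_heads, n, n)
    weights = softmax(scores, axis=-1)                    # every patch attends to every patch
    heads = weights @ V                                   # (n_heads, n, d_head)
    merged = heads.transpose(1, 0, 2).reshape(n, d)       # concatenate the heads
    return merged @ W_o, weights

rng = np.random.default_rng(0)
n_patches, d_model, n_heads = 16, 16, 4
X = rng.normal(size=(n_patches, d_model))                 # stand-in patch embeddings
W_q, W_k, W_v, W_o = (rng.normal(size=(d_model, d_model)) for _ in range(4))
out, weights = multi_head_self_attention(X, W_q, W_k, W_v, W_o, n_heads)
```

Because every patch is compared with every other patch, each head's attention map spans the whole image, which is how global context enters the representation.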

After the self-attention mechanism, the patch embeddings are passed through a feedforward neural network to produce the final output. The output can be used for a variety of image processing tasks, such as image classification or object detection.
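A minimal sketch of this last stage for image classification follows. It assumes a two-layer feedforward block with a ReLU activation (published ViTs typically use GELU), mean pooling over patches in place of a dedicated class token, and random placeholder weights; the function names and the choice of three classes are purely illustrative.

```python
import numpy as np

def feed_forward(tokens, W1, b1, W2, b2):
    """Position-wise feedforward block applied independently to every patch token."""
    hidden = np.maximum(0.0, tokens @ W1 + b1)   # ReLU (assumption; GELU is more common)
    return hidden @ W2 + b2

def classify(tokens, W_cls, b_cls):
    """Pool the patch tokens and map them to class probabilities."""
    pooled = tokens.mean(axis=0)                 # assumption: mean pooling over patches
    logits = pooled @ W_cls + b_cls
    exp = np.exp(logits - logits.max())          # softmax with max subtraction for stability
    return exp / exp.sum()

rng = np.random.default_rng(0)
n_patches, d_model, d_hidden, n_classes = 16, 16, 32, 3
attended = rng.normal(size=(n_patches, d_model))  # stand-in for the self-attention output

W1, b1 = 0.1 * rng.normal(size=(d_model, d_hidden)), np.zeros(d_hidden)
W2, b2 = 0.1 * rng.normal(size=(d_hidden, d_model)), np.zeros(d_model)
W_cls, b_cls = 0.1 * rng.normal(size=(d_model, n_classes)), np.zeros(n_classes)

features = feed_forward(attended, W1, b1, W2, b2)
probs = classify(features, W_cls, b_cls)
```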

ViTs have shown promising results in image processing tasks and have achieved state-of-the-art performance on several benchmarks, particularly when pretrained on very large datasets; with limited training data they tend to lag behind comparable CNNs. ViTs also require more memory and computational resources than traditional CNN-based models, and they may not perform as well on tasks that require fine-grained spatial information, such as segmentation.

Ali A. Convolutional neural network (CNN) with practical implementation. Wavy AI Research Foundation. Downloaded from https://medium.com/machine-learning-researcher 20 Jan 2022.

Ali A. Recurrent neural network and LSTM with practical implementation. Wavy AI Research Foundation. Downloaded from https://medium.com/machine-learning-researcher 20 Jan 2022.

Chartrand G, Cheng PM, Vorontsov E, et al. Deep learning: a primer for radiologists. RadioGraphics 2017; 37:2113-2131. [DOI LINK]

Cheng PM, Montagnon E, Yamashita R, et al. Deep learning: an update for radiologists. RadioGraphics 2021; 41:1427-1445. [DOI LINK]

Dosovitskiy A, Beyer L, Kolesnikov A, et al. An image is worth 16x16 words: transformers for image recognition at scale. arXiv:2010.11929 (2021).

Giacaglia G. How transformers work. Towards Data Science. 10 Mar 2019

He K, Gan C, Li Z, et al. Transformers in medical image analysis. Intelligent Medicine 2023; 3:59-78. [DOI LINK]

Lundervold AS, Lundervold A. An overview of deep learning in medical imaging focusing on MRI. Z Med Phys 2019; 29:102-127. [DOI LINK]

Maier A, Syben C, Lasser T, Riess C. A gentle introduction to deep learning in medical image processing. Z Med Phys 2019; 29:86-101. [DOI LINK]

Moawad AW, Fuentes DT, ElBanan MG, et al. Artificial intelligence in diagnostic radiology: where do we stand, challenges, and opportunities. J Comput Assist Tomogr 2022; 46:78-90. [DOI LINK]

Nicholson C. A beginner’s guide to LSTMs and recurrent neural networks. In: A.I. Wiki. A beginner’s guide to important topics in AI, machine learning, and deep learning. (Downloaded from wiki.pathmind.com 13 Jan 2022)

Siddique N, Paheding S, Elkin CP, Devabhaktuni V. U-Net and its variants for medical image segmentation: a review of theory and applications. IEEE Access 2021; 9:82031-82057. [DOI LINK]

Vaswani A, Shazeer N, Parmar N, et al. Attention is all you need. (2017-6-12). arXiv:1706.03762. [DOI LINK] (Original paper describing Transformers)

Willemink MJ, Roth HR, Sandfort V. Toward foundational deep learning models for medical imaging in the new era of Transformer Networks. Radiology: Artificial Intelligence 2022; 4(6):e210284. [DOI LINK]

Wolterink JM, Mukhopadhyay A, Leiner T, et al. Generative adversarial networks: a primer for radiologists. RadioGraphics 2021; 41:840-857. [DOI LINK]

Yi X, Walsh E, Babyn P. Generative adversarial network in medical imaging: a review. Medical Image Analysis 2019; 58:101552. [DOI LINK]
