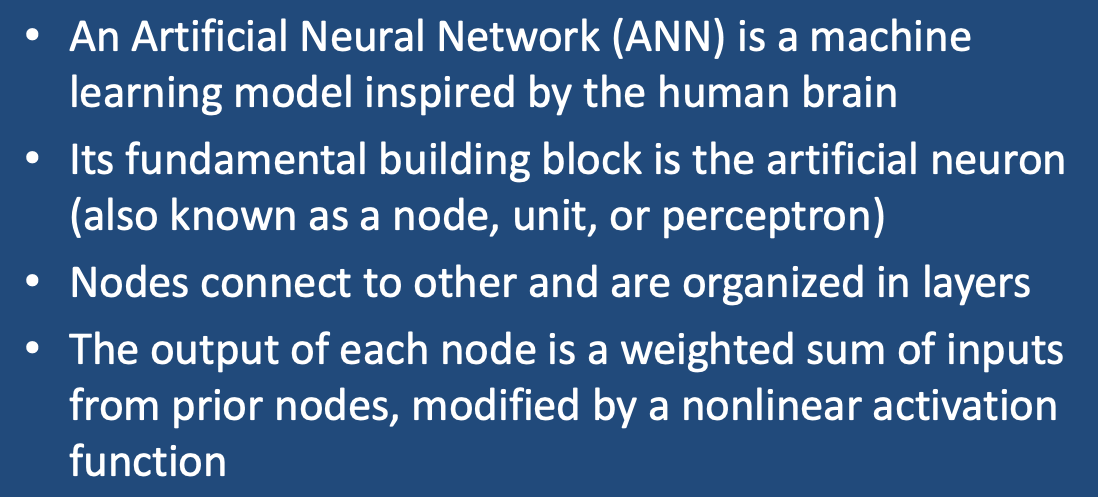

An artificial neural network (ANN) is a type of machine learning model inspired by the structure of the human brain. It consists of a set of interconnected processing nodes (artificial neurons), organized into layers that work together. The initial layer receives input data and the final layer produces the output. Between the input and output layers are one or more hidden layers. The hidden layers perform a series of nonlinear transformations on the input data, allowing the network to learn complex patterns in the data.

ANNs with just a small number (1-3) of hidden layers are known as shallow networks; those with many more layers are called deep networks.

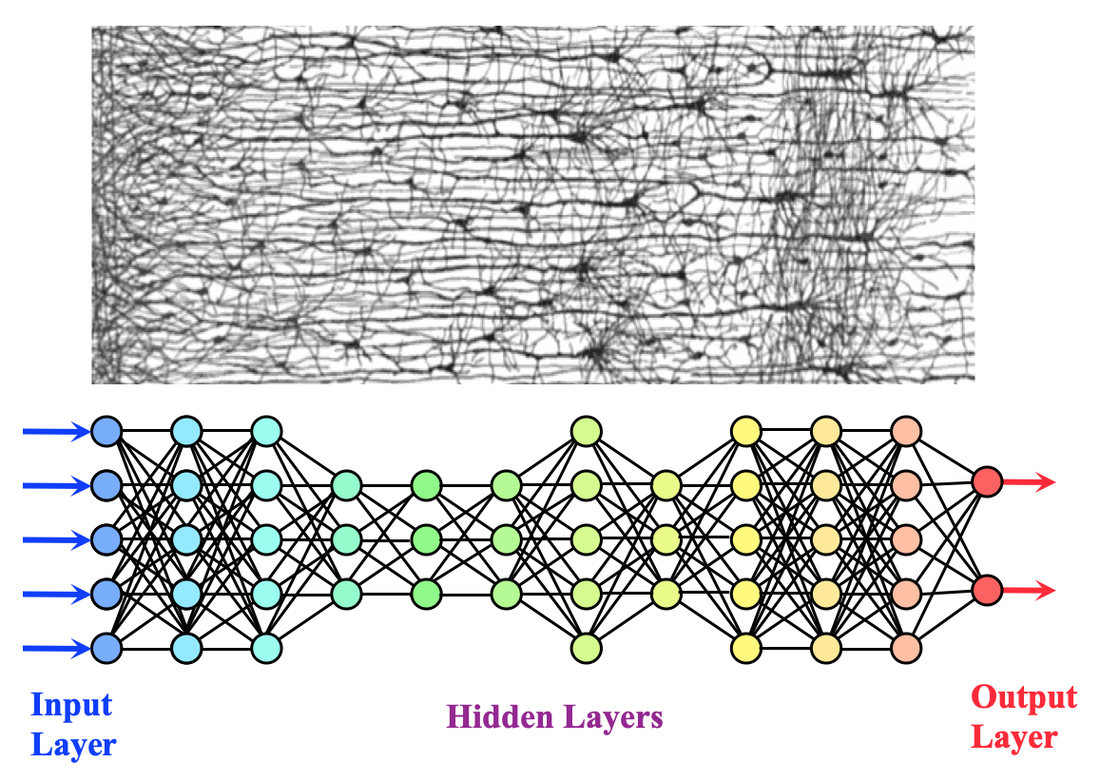

The fundamental component of artificial neural networks, loosely modeled after biological nerve cells, is called the perceptron, alternatively known as an (artificial) neuron, unit, or node. In network diagrams like the one above, individual neurons are represented by colored circles.

A biological nerve cell receives input stimuli from neighboring nerves through its dendrites. If the sum of these stimuli is sufficient to create membrane depolarization in the neuron's cell body, an electrical output signal will be transmitted down the axon to its terminals (which in turn may stimulate dendrites of other nerves).

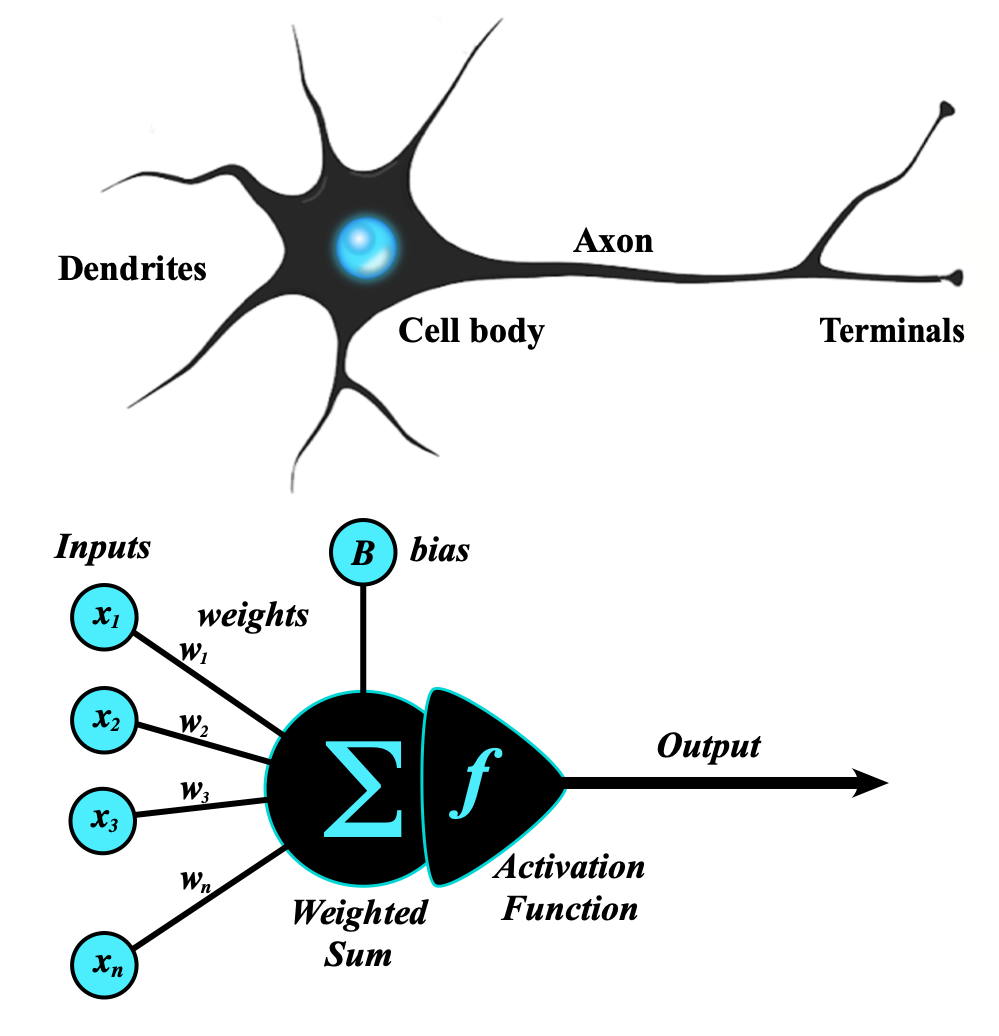

The artificial neuron receives a set of weighted inputs ($w_1x_1 + w_2x_2 + \dots + w_nx_n$) plus a constant bias ($B$). This weighted sum is then fed into an activation function that produces an output for the node.
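The computation performed by a single artificial neuron can be sketched in a few lines of Python. This is only an illustrative sketch: the function name `neuron_output` and the input, weight, and bias values are hypothetical, and ReLU is used as the activation function purely as an example.

```python
def relu(z):
    """Rectified Linear Unit: returns z if positive, otherwise 0."""
    return max(0.0, z)


def neuron_output(inputs, weights, bias, activation):
    """Weighted sum of the inputs plus a bias, passed through an activation."""
    if len(inputs) != len(weights):
        raise ValueError("inputs and weights must have the same length")
    # w1*x1 + w2*x2 + ... + wn*xn + B
    weighted_sum = sum(w * x for w, x in zip(weights, inputs)) + bias
    return activation(weighted_sum)


# Hypothetical example values (not taken from the article)
x = [1.0, 2.0, 3.0]      # inputs x1..x3
w = [0.5, -0.25, 0.25]   # weights w1..w3
B = 0.5                  # bias

print(neuron_output(x, w, B, relu))  # weighted sum is 1.25, so ReLU returns 1.25
```

Passing the activation in as an argument keeps the weighted-sum step separate from the choice of nonlinearity, which mirrors the two stages described above.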

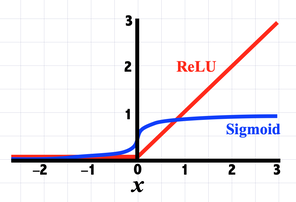

Common activation functions: logistic sigmoid $= 1/(1+e^{-x})$ and ReLU $= \max(0, x)$
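As a rough sketch, the two functions named in the caption can be written in Python as follows. The function names are chosen here for illustration, and the sigmoid is split into two branches only to avoid numerical overflow for large negative inputs; mathematically both branches equal 1/(1+e^(−x)).

```python
import math


def logistic_sigmoid(x):
    """Logistic sigmoid 1 / (1 + e^(-x)), evaluated in a numerically stable way."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    # For negative x, use the equivalent form e^x / (1 + e^x) to avoid overflow.
    e = math.exp(x)
    return e / (1.0 + e)


def relu(x):
    """Rectified Linear Unit: max(0, x)."""
    return max(0.0, x)


# Compare the two functions over a few sample inputs.
for x in (-10.0, -1.0, 0.0, 1.0, 10.0):
    print(f"x={x:6.1f}  sigmoid={logistic_sigmoid(x):.5f}  relu={relu(x):.1f}")
```

In the printed values the sigmoid flattens out near 0 and 1 at the extremes, whereas ReLU keeps growing with positive inputs.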

The purpose of the activation function is to introduce nonlinearity into the network. Without it, every layer would compute only a linear combination of its inputs, so the whole network would collapse into a single linear transformation, and the more interesting and powerful behaviors of neural networks would not be possible. The most common forms of activation functions are steps, ramps, and sigmoids. The most widely used is the Rectified Linear Unit (ReLU) function, which passes positive sums through unchanged and outputs zero otherwise. The logistic sigmoid function, popular in the past, has fallen out of favor because it saturates at large positive and negative input values, where its output barely changes. A dozen examples of commonly used activation functions in graphical format can be seen here. Usually the same activation function is used for all nodes of a network, except for the final output node(s).
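To illustrate why layers without an activation function add no expressive power, the sketch below stacks two purely linear layers and shows that a single linear layer reproduces them exactly. The weights, biases, and helper names are hypothetical values chosen for the example.

```python
def matvec(M, v):
    """Multiply matrix M (list of rows) by vector v."""
    return [sum(m * x for m, x in zip(row, v)) for row in M]


def matmul(A, B):
    """Multiply matrix A by matrix B (both lists of rows)."""
    cols = list(zip(*B))
    return [[sum(a * b for a, b in zip(row, col)) for col in cols] for row in A]


def add(u, v):
    """Element-wise sum of two vectors."""
    return [a + b for a, b in zip(u, v)]


# Hypothetical weights and biases for two layers with NO activation function.
W1, b1 = [[1.0, 2.0], [0.0, -1.0]], [0.5, 1.0]
W2, b2 = [[2.0, 1.0], [-1.0, 3.0]], [0.0, -2.0]


def two_linear_layers(x):
    hidden = add(matvec(W1, x), b1)      # layer 1: W1 x + b1
    return add(matvec(W2, hidden), b2)   # layer 2: W2 h + b2


# The same mapping collapsed into one layer:
# W2 (W1 x + b1) + b2 = (W2 W1) x + (W2 b1 + b2)
W_combined = matmul(W2, W1)
b_combined = add(matvec(W2, b1), b2)


def one_linear_layer(x):
    return add(matvec(W_combined, x), b_combined)


x = [3.0, -4.0]
print(two_linear_layers(x))  # [-4.0, 17.5]
print(one_linear_layer(x))   # [-4.0, 17.5]
```

Inserting a nonlinear function such as ReLU between the two layers breaks this equivalence, which is what lets additional layers represent more complex patterns.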

The ANN diagram above constitutes a simple feedforward network, where the outputs of nodes from one layer become inputs for the next layer. Furthermore, the output of every node passes to every node in the next layer, making this a fully connected network. However, many other configurations are possible. For example, the output of one node may pass back to itself (a recurrent network) or to other nodes in the same layer. Alternatively, the output from one node may skip one or more layers or pass to nodes in multiple layers (a dense network).
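A forward pass through a small fully connected feedforward network might be sketched as follows. The 2-3-1 layer sizes, the weights, and the function names are hypothetical; the output layer uses a different (identity) activation only to reflect the common practice of treating the final node separately.

```python
def relu(z):
    """Rectified Linear Unit: max(0, z)."""
    return max(0.0, z)


def identity(z):
    """Pass the weighted sum through unchanged."""
    return z


def dense_layer(inputs, weights, biases, activation):
    """Fully connected layer: every input feeds every node in the layer.

    weights holds one row of input weights per node; biases holds one bias per node.
    """
    return [
        activation(sum(w * x for w, x in zip(node_weights, inputs)) + b)
        for node_weights, b in zip(weights, biases)
    ]


def feedforward(inputs, layers):
    """Pass the inputs through each layer in turn; outputs become the next inputs."""
    activations = inputs
    for weights, biases, activation in layers:
        activations = dense_layer(activations, weights, biases, activation)
    return activations


# Hypothetical network: 2 inputs -> 3 hidden nodes (ReLU) -> 1 output node (identity)
layers = [
    ([[1.0, -1.0], [0.5, 0.5], [-1.0, 2.0]], [0.0, -1.0, 0.5], relu),
    ([[1.0, 2.0, -1.0]], [0.25], identity),
]

print(feedforward([2.0, 1.0], layers))  # [1.75]
```

Recurrent or skip connections would require a different control flow than this simple layer-by-layer loop, so they are not covered by the sketch.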

References

Brunel N, Hakim V, Richardson MJE. Single neuron dynamics and computation. Curr Opin Neurobiol 2014; 25:149-155. [DOI LINK] (The simple neural model embodied in the perceptron dates from 1943. It’s a lot more complicated than this! Plus a single neuron may have over 100,000 direct connections to others.)

Erickson BJ, Korfiatis P, Akkus Z, Kline TL. Machine learning for medical imaging. RadioGraphics 2017; 37:505-515. [DOI LINK]

European Society of Radiology. What the radiologist should know about artificial intelligence - an ESR white paper. Insights into Imaging 2019; 10:44.

LeCun Y, Bengio Y, Hinton G. Deep learning. Nature 2015; 521:437-444. [DOI LINK]

Lee D, Lee J, Ko J, et al. Deep learning in MR image processing. iMRI 2019; 23:81-99. [DOI LINK]

McCulloch WS, Pitts WH. A logical calculus of the ideas immanent in nervous activity. Bull Math Biophysics 1943; 5:115-133. (Original paper describing the simplified neuron model on which the perceptron is based.)

Nicholson C. A beginner’s guide to neural networks and deep learning. In: A.I. Wiki. A beginner’s guide to important topics in AI, machine learning, and deep learning. (Downloaded from wiki.pathmind.com 13 Jan 2022)

Related Questions

Is artificial intelligence the same as machine learning?
