Don't be discouraged. The General Linear Model (GLM) can be daunting to those with little background in statistics or matrix algebra, but I'll try to make it more understandable here. This is a worthwhile endeavor, as GLM has been the most widely used technique for analyzing task-based fMRI experiments for the past 25 years and is the default method provided by vendors for their clinical fMRI packages.

GLM can be thought of as an extension of a more familiar statistical technique: linear regression. Linear regression, sometimes called trend-line analysis, is a method used to calculate the "best fitting" line for a set of experimental data. Linear regression software is widely available in popular graphics and spreadsheet packages such as Microsoft Excel.

Simplified linear regression example, adapted from

data of Hansen et al (2004)

As an application of linear regression, let us examine how the mean fMRI signal (Y) of a voxel in the visual cortex responds to increasing levels of retinal illumination (X). Plotting our data on a graph (left) we discern a fairly linear relationship between X and Y. Linear regression software computes the “best fitting” straight line for this data. For each data point (xi, yi) the relationship can be written yi = xiβ + εi, where β is the calculated slope of the line and εi is the calculated error (or residual) distance between the line and corresponding data point. Note that the xi values are determined by the design of the experiment, the yi values are measured, while β and the εi's are calculated by the software.

A required assumption for linear regression is that the errors (εi) are random and independent, following a Gaussian distribution with mean of zero. The “best fitting” line is defined to be the one that minimizes the sum of the squared errors (ε1² + ε2² + ε3² + …) between the points and the chosen line. Note that although the calculated slope (β) is the same for each data point (xi, yi), the individual errors (εi) will differ.

Our simple linear regression example has actually produced n separate equations, each of the form yi = xiβ + εi, where n is the number of data points acquired for each time interval i. Matrix algebra allows us to condense this set of equations into a more manageable form. We do this by defining Y, X, and ε as "column vectors", each containing a list of individual measured or calculated values. Specifically,

If you are not familiar with matrix algebra or need a simple review, check out Coolmath.com

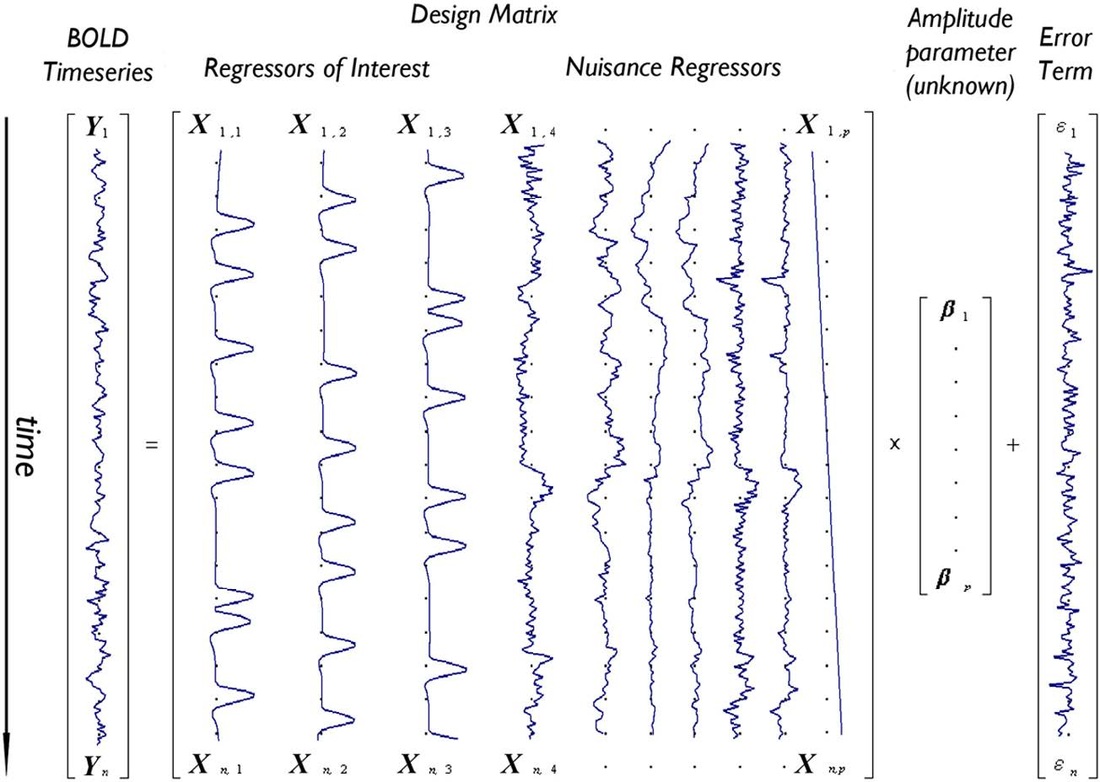

The General Linear Model (GLM) representation of an fMRI experiment retains the same basic form (Y = Xβ + ε) as does our simple linear regression example. Stated in words, the GLM says that Y (the measured fMRI signal from a single voxel as a function of time) can be expressed as the sum of one or more experimental design variables (X), each multiplied by a weighting factor (β), plus random error (ε).

In GLM both Y and ε remain as single column vectors containing fMRI signal data (yi) or error estimates (εi) respectively for a single voxel at successive time points (i = 1 to n). The experimental design matrix (X), however, is typically much more complex, consisting of perhaps 5-10 columns instead of only one. Each new column of X would be constructed by the investigator to reflect a specific factor (“regressor”) thought to influence the outcome of the experiment.

In GLM both Y and ε remain as single column vectors containing fMRI signal data (yi) or error estimates (εi) respectively for a single voxel at successive time points (i = 1 to n). The experimental design matrix (X), however, is typically much more complex, consisting of perhaps 5-10 columns instead of only one. Each new column of X would be constructed by the investigator to reflect a specific factor (“regressor”) thought to influence the outcome of the experiment.

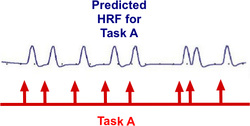

Essential regressors (also known as regressors of interest) are a set of idealized predictions of what the hemodynamic response function (HRF) should look like if a voxel of interest became activated due to a task or stimulus. For example, consider a simple event-related fMRI experiment where a subject is given three tasks (A, B, and C) — such as listening to sounds at three different frequencies presented in random order. if the stimuli for Task A were applied at times denoted by the red arrows above, the predicted response of a receptive voxel in the auditory cortex might be a train of slightly-delayed, short-duration HRFs as illustrated. Numerical values (X i,1) corresponding time points of this predicted response pattern to Task A would then be transferred to and listed vertically in the first column of the GLM design matrix (illustrated below). The predicted responses to tasks B and C would likewise be created and used to fill the second and third columns of the design matrix (X).

The last 7 columns of our design matrix example are considered covariates or nuisance regressors – experimental factors that confound the analysis (such as head motion or signal drifts) but which are of no particular interest by themselves. The data filling these columns may be empirically determined (e.g., actual measurements of head translations and rotations obtained during the experiment) or modeled (e.g., by a linear trend or oscillating basis functions).

Because the design matrix (X) now contains multiple columns, the weighting parameter (β) will no longer be a single number as it was in the simple linear regression example. Fitting data in a full GLM experiment means that β will now become a column vector with values (β1, β2, …, βp), each reflecting the relative contribution of each design factor (Xi) to the fMRI signal. Hence the amplitude vector (β) must have the same number of rows as the design matrix (X) has columns.

Depiction of the General Linear Model (GLM) for a voxel with time-series Y predicted by a design matrix X including 10 effects (three regressors of interest – e.g., tasks A,B,C – and seven nuisance regressors – e.g., six motion parameters and one linear drift). Calculated weighting factors (β1 − β10) corresponding to each regressor are placed in amplitude vector β while column vector ε contains calculated error terms (εi) for the model corresponding to each time point i. (From Monti, 2011, under CC BY license)

Two major assumptions are inherent to basic GLM analysis, either one of which may be rightfully challenged. First, GLM is a univariate approach, calculating statistics on a voxel-by-voxel basis and assuming that signals from each voxel are independent of one another. Secondly, the model assumes that the errors are random and independent, following a Gaussian distribution with mean of zero.

Statistical Parametic Map (SPM)

Statistical Parametic Map (SPM)overlaid on anatomic image

With fMRI data collected and stored in the Y vector and experimental design factors specified in the X matrix, components of the β and ε vectors are then computed using classical or Bayesian approaches. These results can then be statistically interrogated, testing various hypotheses about the regressors alone or in combination. The final output is usually a Statistical Parametric Map (SPM) or Posterior Probability Map (PPM) that is overlaid on an anatomic image or template.

Needless to say, GLM analysis and processing is very complex, and the interested reader should refer to the references provided below as well as to material in the Advanced Discussion.

Needless to say, GLM analysis and processing is very complex, and the interested reader should refer to the references provided below as well as to material in the Advanced Discussion.

Advanced Discussion (show/hide)»

Modeling the Hemodynamic Response Function (HRF)

HRF Models

HRF Models

How, exactly should the shape of the HRF be determined for a given fMRI experiment? The term "canonical" HRF refers to the default shape selected by an investigator prior to data collection. Choices include a simple gamma function, a difference of two gamma functions (to allow for post-stimulus undershoot), Fourier basis sets, basis sets consisting of "plausible" HRF shapes, and finite impulse response functions. For improved accuracy the 1st and 2nd time derivatives of the HRF (commonly referred to as the "temporal" and "dispersion" derivatives respectively) may be added to the model. Use of these derivatives is equivalent to performing a second order Taylor series approximation of the HRF function. Including the 1st derivative allows timing of the peak response to be adjusted while the 2nd derivative allows the width of the HRF to vary.

To create a regressor, the chosen shape of the HRF is convolved with ("multiplied by") the experimental design. Nonlinear interactions among events that distort the expected HRF (such as two stimuli applied in a very short time frame where the total response may be less than the sum of the individual responses) may also be modeled using Volterra kernels or other methods.

To create a regressor, the chosen shape of the HRF is convolved with ("multiplied by") the experimental design. Nonlinear interactions among events that distort the expected HRF (such as two stimuli applied in a very short time frame where the total response may be less than the sum of the individual responses) may also be modeled using Volterra kernels or other methods.

Testing for Statistical Significance

The ultimate purpose of most fMRI experiments is to determine how the brain responds to various stimuli and conditions. For task-based fMRI studies the calculated amplitude vector β from GLM provides estimates (βi) of the relative weights ("importance") for each component (Xi) in the experimental design.

We begin with one of the simplest of all fMRI experiments: finger tapping. Here the subject alternately cycles between tapping her fingers and resting while BOLD responses over the entire brain are recorded. In this example the design matrix might contain just a single essential regressor denoted: X1 = "finger tapping". After data collection, GLM analysis produces an estimate (β1) of the amplitude response to finger tapping for each voxel. Voxels near the motor cortex would demonstrate strong responses with relatively large values of β1, while far-away voxels might only contain resting state "noise". So what criteria could we use to determine when we consider a voxel to be truly activated?

We begin with one of the simplest of all fMRI experiments: finger tapping. Here the subject alternately cycles between tapping her fingers and resting while BOLD responses over the entire brain are recorded. In this example the design matrix might contain just a single essential regressor denoted: X1 = "finger tapping". After data collection, GLM analysis produces an estimate (β1) of the amplitude response to finger tapping for each voxel. Voxels near the motor cortex would demonstrate strong responses with relatively large values of β1, while far-away voxels might only contain resting state "noise". So what criteria could we use to determine when we consider a voxel to be truly activated?

The formal/classical method of testing for statistical significance involves constructing a null hypothesis (Ho), which in this case might be written in words as "There is no net BOLD signal effect in a voxel during finger tapping compared to rest." In mathematical terms, this could be expressed as:

Ho: β1 = 0.

Ho: β1 = 0.

Typically null hypotheses are constructed in a negative/perverse way — i.e., that no fMRI effect is produced by a given event or action. In reality, however, we secretly wish to be surprised and find a result so unlikely to occur by chance that we can reject the null hypothesis.

By "statistically significant" we usually mean that the Type I (false positive) error of rejecting the null hypothesis is less than a certain arbitrary level of probability (p-value), perhaps p ≤ 0.05. In other words, we might call a result "significant" only when the probability of such an event happening by chance occurs less than 5% of the time.

Comparison between t- and z-distributions. As degrees of freedom (df) increase, the two converge.

Comparison between t- and z-distributions. As degrees of freedom (df) increase, the two converge.

The GLM calculation produces both an estimate for β1 and a standard deviation (SD) of this estimate. The SD depends on the sum of the mean squared errors as well as the structure and size of the design matrix (X). To test the null hypothesis, we form the test statistic T = β1/SD. Provided the assumptions of the GLM are maintained (i.e., errors are independent and Gaussian with mean zero), the statistic T follows a Student's t-distribution from which p-value percentiles can be determined. The precise shape of the t-distribution depends on the degrees of freedom (df), defined to be the number time points analyzed minus the number of independent regressors. The t-distribution is closely related to the Gaussian or standard normal (z-) distribution, converging to the latter as the df → ∞. In experiments with more than 100 observations, Z-values and percentiles are often reported instead of T-values, since the two are very close.

Multiple Regressors: Contrasts and F-tests

The finger-tapping example above contained only a single covariate of interest (X1 = "finger tapping") and its corresponding estimated amplitude (β1). Most interesting fMRI studies, however, include several regressors of interest. Let us consider a more complicated fMRI experiment wherein a subject is alternately shown red, blue, and green lights, alone or in combination. Three essential regressors of the design might be: X1 = "red light on", X2 = "blue light on", and X3 = "green light on". After data collection, the GLM would generate three amplitude estimates (β1, β2, and β3) corresponding to the three conditions that could be tested for statistical significance.

Multiple design variables allow combination effects to be investigated. For example, we might want to know whether a voxel was equally activated by the red light and blue light (β1= β2), or equivalently (β1 − β2 = 0). Or perhaps whether the average of red and blue illumination has the same effect as green illumination alone (½ [β1 + β2 ] = β3) , or equivalently (½β1 + ½β2 − β3 = 0).

These combinations are difficult to express in words, but can handily be represented in matrix form, multiplying each calculated amplitude vector β by a linear contrast vector cT. The two contrasts described above can be written as:

Even more complex hypothesis tests involving these multiple regressors could be imagined. For example, are the three means all equal to zero simultaneously? Or does a certain combination of lights lead to a significantly higher activity than another combination? In these cases, the contrast vector becomes a full matrix rather than a linear array of numbers, and the GLM procedure blossoms into a full analysis of variance (ANOVA). The applicable test statistic now becomes F instead of T, where F is formed by dividing the sum of squared residuals under a reduced model (without regressors) by the sum of squared residuals under the full model. Provided the usual GLM assumptions of identical and independent distribution of errors are preserved, the statistic F is distributed as according to the F-distribution which may used to test for statistical significance of hypotheses.

Autocorrelation of Data

One of the basic tenets of GLM — statistical independence of errors from point to point — is nearly always violated in an fMRI experiment. Functional MRI studies are not random collections of data but time series, with high values more likely to follow high values and low values more likely to follow low values. Quasi-periodic noise sources (such as cardiac and respiratory pulsations) also contribute to this phenomenon.

Fortunately, the presence of serial correlation does not affect estimates of the weighting parameters (βi). However, it does produce an underestimation of the error variance because the number of truly independent observations (the "effective" degrees of freedom, df) will be lower than the actual number of observations made. Thus t- or F-statistics, the shapes of whose distributions depend on df, will be artificially inflated, potentially resulting in elevated false positive rates and spurious inferences.

The most common method to control for autocorrelations is known as pre-whitening. The term "pre-whitening" refers to removing serial correlations in time series data so that its residuals have a more uniform distribution of frequencies similar to "white noise". Pre-whitening is performed through an iterative process. An initial GLM solution is first generated and the autocorrelation structure of the residuals is fit to a first or second order Auto-Regressive Model [AR(1) or AR(2)]. The original raw data is then adjusted by removing the estimated covariance structure and a second GLM performed on the "whitened" data.

Fortunately, the presence of serial correlation does not affect estimates of the weighting parameters (βi). However, it does produce an underestimation of the error variance because the number of truly independent observations (the "effective" degrees of freedom, df) will be lower than the actual number of observations made. Thus t- or F-statistics, the shapes of whose distributions depend on df, will be artificially inflated, potentially resulting in elevated false positive rates and spurious inferences.

The most common method to control for autocorrelations is known as pre-whitening. The term "pre-whitening" refers to removing serial correlations in time series data so that its residuals have a more uniform distribution of frequencies similar to "white noise". Pre-whitening is performed through an iterative process. An initial GLM solution is first generated and the autocorrelation structure of the residuals is fit to a first or second order Auto-Regressive Model [AR(1) or AR(2)]. The original raw data is then adjusted by removing the estimated covariance structure and a second GLM performed on the "whitened" data.

Multi-Subject Analysis

Up to now we have discussed the GLM analysis of data from individual subjects only. To make fMRI conclusions more generalizable, individual results are often aggregated across multiple subjects or groups. Before statistical analysis is performed, however, the data from the multiple subjects must be warped into a common anatomic space (such as Talairach or MNI templates). This process is called normalization and has been described in a prior Q&A.

One of the earliest approaches for group analysis was simply to warp the data from all subjects into a normalized space and then concatenate it into one gigantic time series (as though the data were all recorded from a single "super" subject). This so-called fixed effects analysis has been largely abandoned because it does not adequately account for individual differences and cannot cleanly be used for making population inferences.

Multi-subject fMRI data is intrinsically hierarchical with at least two levels that must be considered: the individual and the group. A mixed-effect analysis is therefore more appropriate where errors are measured and considered both within and between subjects.

One method for doing this is a two-level GLM analysis. At the first level a standard GLM is performed separately for each subject. For the second level, however, only the βi values (not the full fMRI response data) are carried through where they become dependent variables. Second-level inferences can be difficult because the βi's are only estimates and their values are "contaminated" by variance from the first-level analysis. Several methods to handle this problem exist, one of the most popular being the restricted maximum likelihood technique using an expectation-maximization algorithm.

References

Hansen KA, David SV, Gallant JL. Parametric reverse correlation reveals spatial linearity of retinotopic human V1 BOLD response. NeuroImage 2004; 23:233-241. (This experiment is the model used for our simplified linear regression example above)

Monti MM. Statistical analysis of fMRI time-series: a critical review of the GLM approach. Front Hum Neurosci 2011; 5(28):1-13, doi: 10.3389/fnhum.2011.00028

Poline J-B, Brett M. The general linear model and fMRI: Does love last forever? Neuroimage 2012; 62:871-880.

Student. The probable error of a mean. Biometrika 1908; 6:1-25. (The original paper by "Student", a pseudonym used by William Sealy Gosset who wished to remain anonymous due to his work in quality assurance of small samples at the Guinness Brewery in Dublin. The letter "t" associated with Student's distribution was supplied by English statistician R. A. Fisher in 1925).

The FIL Methods Group. SPM12 Manual. Wellcome Trust Center for Neuroimaging, London, 2014.

Hansen KA, David SV, Gallant JL. Parametric reverse correlation reveals spatial linearity of retinotopic human V1 BOLD response. NeuroImage 2004; 23:233-241. (This experiment is the model used for our simplified linear regression example above)

Monti MM. Statistical analysis of fMRI time-series: a critical review of the GLM approach. Front Hum Neurosci 2011; 5(28):1-13, doi: 10.3389/fnhum.2011.00028

Poline J-B, Brett M. The general linear model and fMRI: Does love last forever? Neuroimage 2012; 62:871-880.

Student. The probable error of a mean. Biometrika 1908; 6:1-25. (The original paper by "Student", a pseudonym used by William Sealy Gosset who wished to remain anonymous due to his work in quality assurance of small samples at the Guinness Brewery in Dublin. The letter "t" associated with Student's distribution was supplied by English statistician R. A. Fisher in 1925).

The FIL Methods Group. SPM12 Manual. Wellcome Trust Center for Neuroimaging, London, 2014.

Related Questions

How are those activation "blobs" on an fMRI image created, and what exactly do they represent?

How are those activation "blobs" on an fMRI image created, and what exactly do they represent?